How to Generate and Renew an SSL Certificate using Terraform on AWS

Recently, a Mission customer requested that all their environments use auto-generated SSL certificates for end-to-end encryption, i.e. from the end user to the load balancer to the web server. This presented a significant challenge because we were already deploying a complex workload via Terraform that included Windows EC2 instances in an Auto Scaling group, ECS containers, and an Application Load Balancer. First, we investigated generating the certificates on the EC2 instances with Let's Encrypt, uploading them to S3 buckets, importing them into AWS Certificate Manager (ACM), and attaching them to the load balancers. However, this approach would have required significant overhead and integrated poorly with our Terraform workload. Additionally, renewing certificates for tens of environments would be quite onerous.

After some research, we discovered the acme provider for Terraform, whose acme_certificate resource generates a Let's Encrypt SSL certificate with many customizable options. We accomplished the customer's request by creating a Terraform module that uses the acme provider to generate the SSL certificate, imports it into ACM, attaches it to an Application Load Balancer, and uploads all certificate files (.pem, .key, .pfx, and chain) to an S3 bucket. We then used Terraform to render custom userdata scripts for each environment. Each script downloads the appropriate certificate in .pfx format from S3, imports it into the Windows Certificate Store, and finally creates an HTTPS binding in IIS.

Certificate renewals also became relatively painless. When a certificate needed to be renewed, the client would run terraform apply, which would renew the certificate and update the corresponding files in S3, the entry in ACM, and the load balancer. The Auto Scaling group would then be cycled so that new EC2 instances pulled the renewed certificate from S3 at startup and imported it into IIS. Here are the steps to generate the SSL certificates and renew them.

Steps:

- Configure the Terraform providers for Let's Encrypt/ACME and tls.

- Create certificates and private keys.

- Import the generated Certificates into ACM

- Attach the Certificates to the Load Balancer(s)

- Copy the Certificates to S3 with common folder structure and filenames

- Utilize Userdata/CICD pipeline to retrieve the certificates

- Import certificates into EC2 host’s certificate store

- Renew Certificates via Terraform Apply.

Procedure

- Configure the Terraform providers for Let's Encrypt/ACME. This is the most important part and required some customization: the acme provider is not published in the default hashicorp namespace, so Terraform cannot find it unless its source is declared in required_providers. The tls provider allows us to generate our private key.

terraform {
  required_providers {
    acme = {
      source = "vancluever/acme"
    }
  }
}

provider "acme" {
  server_url = var.server_url
}

provider "tls" {}

Note: if you want your certificates to be trusted by browsers, be sure to set the server_url variable to the Let's Encrypt production ACME server (https://acme-v02.api.letsencrypt.org/directory).
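The original module's variable declarations are not shown, so the following is only a sketch of how server_url might be declared. It assumes you want to default to the Let's Encrypt staging directory (whose certificates are not browser-trusted) while testing, and override it with the production URL per environment. The description text and validation rule are illustrative assumptions.

```hcl
# Hypothetical declaration for var.server_url (not shown in the original module).
variable "server_url" {
  description = "ACME directory URL. Defaults to Let's Encrypt staging to avoid production rate limits while testing."
  type        = string
  default     = "https://acme-staging-v02.api.letsencrypt.org/directory"

  # Reject anything that is not an HTTPS URL.
  validation {
    condition     = can(regex("^https://", var.server_url))
    error_message = "The server_url value must be an HTTPS ACME directory URL."
  }
}
```

For a production environment you would then set server_url to https://acme-v02.api.letsencrypt.org/directory, for example in that environment's .tfvars file.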

- Establish resources to create certificates and private keys based on Route53 domains (other DNS providers can be used. See here.) Note: you will need an IAM user access key ID and secret access key with full access to Route53. These can be stored in Secrets Manager to avoid displaying them in plain text in your TF code, or you can create them in the TF code itself with the aws_iam_access_key resource and reference them as we did below:

resource "tls_private_key" "registration" {
  algorithm = "RSA"
  rsa_bits  = var.account_private_key_rsa_bits
}

resource "acme_registration" "registration" {
  account_key_pem = tls_private_key.registration.private_key_pem
  email_address   = var.email_address

  dynamic "external_account_binding" {
    for_each = var.external_account_binding != null ? [null] : []
    content {
      key_id      = var.external_account_binding.key_id
      hmac_base64 = var.external_account_binding.hmac_base64
    }
  }
}

resource "acme_certificate" "certificates" {
  for_each = { for certificate in var.certificates : index(var.certificates, certificate) => certificate }

  common_name                  = each.value.common_name
  subject_alternative_names    = each.value.subject_alternative_names
  key_type                     = each.value.key_type
  must_staple                  = each.value.must_staple
  min_days_remaining           = each.value.min_days_remaining
  certificate_p12_password     = each.value.certificate_p12_password
  account_key_pem              = acme_registration.registration.account_key_pem
  recursive_nameservers        = var.recursive_nameservers
  disable_complete_propagation = var.disable_complete_propagation
  pre_check_delay              = var.pre_check_delay

  dns_challenge {
    provider = var.dns_challenge_provider
    config = {
      AWS_ACCESS_KEY_ID     = aws_iam_access_key.letsencrypt_access_key.id
      AWS_SECRET_ACCESS_KEY = aws_iam_access_key.letsencrypt_access_key.secret
      AWS_DEFAULT_REGION    = var.region
      AWS_HOSTED_ZONE_ID    = var.aws_hosted_zone_id
    }
  }
}
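The certificate resource above references aws_iam_access_key.letsencrypt_access_key and var.certificates without showing their definitions. The sketch below is one plausible way to define them, not the module's actual code. The IAM user name, the policy name, and the scoped-down Route53 permissions are assumptions (the article itself used full Route53 access), so verify the action list against the DNS challenge provider's documentation before relying on it.

```hcl
# Hypothetical IAM user that only answers Let's Encrypt DNS challenges.
resource "aws_iam_user" "letsencrypt" {
  name = "letsencrypt-dns-challenge" # assumed name
}

# The access key referenced by the dns_challenge block.
# Note: the secret is written to the Terraform state, so protect the state accordingly.
resource "aws_iam_access_key" "letsencrypt_access_key" {
  user = aws_iam_user.letsencrypt.name
}

# Assumed least-privilege policy; narrower than the full access used in the article.
data "aws_iam_policy_document" "letsencrypt_route53" {
  statement {
    actions   = ["route53:GetChange"]
    resources = ["arn:aws:route53:::change/*"]
  }

  statement {
    actions   = ["route53:ListHostedZonesByName"]
    resources = ["*"]
  }

  statement {
    actions = [
      "route53:ChangeResourceRecordSets",
      "route53:ListResourceRecordSets",
    ]
    resources = ["arn:aws:route53:::hostedzone/${var.aws_hosted_zone_id}"]
  }
}

resource "aws_iam_user_policy" "letsencrypt_route53" {
  name   = "letsencrypt-route53" # assumed name
  user   = aws_iam_user.letsencrypt.name
  policy = data.aws_iam_policy_document.letsencrypt_route53.json
}

# Assumed shape of var.certificates, inferred from the each.value attributes used above.
variable "certificates" {
  type = list(object({
    common_name               = string
    subject_alternative_names = list(string)
    key_type                  = string
    must_staple               = bool
    min_days_remaining        = number
    certificate_p12_password  = string
  }))
}
```

Because the for_each expression keys each certificate by its list index, a single-element list yields the instance that the later snippets address as acme_certificate.certificates[0].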

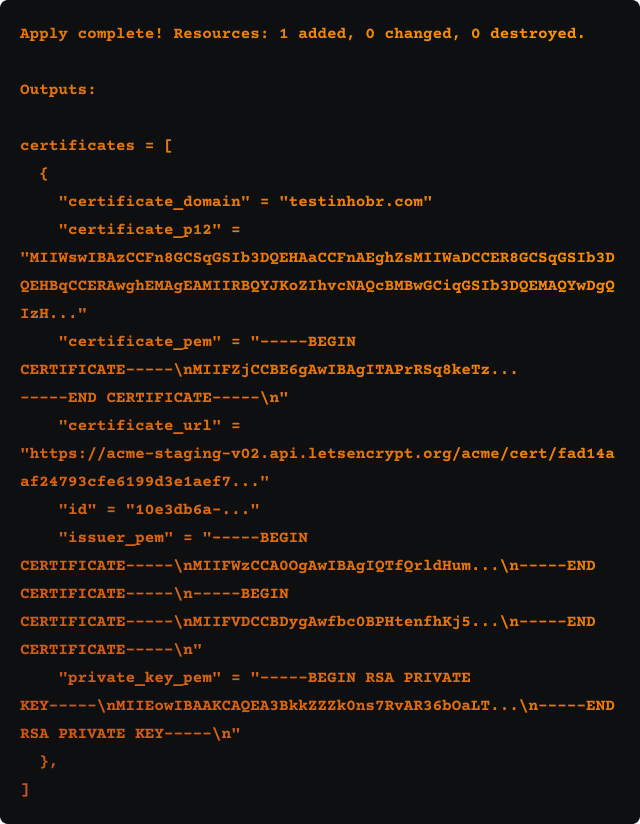

You can use the terraform apply to export the certificate for a better view and it will look like the example below:

- Import the generated Certificates into ACM

This resource uses the outputs of the certificate resource to populate the ACM certificate import fields, which creates the entry in ACM. We can then output the ARN of the certificate for our load balancer.

resource "aws_acm_certificate" "cert" {
  private_key       = acme_certificate.certificates[0].private_key_pem
  certificate_body  = acme_certificate.certificates[0].certificate_pem
  certificate_chain = acme_certificate.certificates[0].issuer_pem

  depends_on = [acme_certificate.certificates, tls_private_key.registration, acme_registration.registration]
}
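One refinement worth considering, which the original resource does not include: when a certificate attached to a listener has to be replaced rather than updated in place, a create_before_destroy lifecycle rule lets Terraform create the new ACM entry before removing the old one. The variant below is a sketch under that assumption. It also drops the explicit depends_on, since the attribute references already give Terraform the same ordering.

```hcl
# Variant of the import resource with a lifecycle rule (an addition, not part of the original module).
resource "aws_acm_certificate" "cert" {
  private_key       = acme_certificate.certificates[0].private_key_pem
  certificate_body  = acme_certificate.certificates[0].certificate_pem
  certificate_chain = acme_certificate.certificates[0].issuer_pem

  # Create the replacement certificate before destroying the one in use,
  # so the load balancer listener never points at a deleted ARN.
  lifecycle {
    create_before_destroy = true
  }
}
```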

- Attach the Certificates to the Load Balancers

Exposing the ARN of the ACM certificate as a Terraform output allows us to attach the certificate to the listeners on our load balancer. Note the acm_certificate_arn output, which is referenced in our alb module:

output "acm_certificate_arn" {
  value = aws_acm_certificate.cert.arn
}

module "alb" {
  source             = "../../modules/alb"
  name               = "${local.local_env}-alb"
  environment        = "test01"
  load_balancer_type = "application"
  ...
  https_listeners = [
    {
      port               = 443
      protocol           = "HTTPS"
      certificate_arn    = module.cert.acm_certificate_arn
      target_group_index = 0
    }
  ]
  ...
}
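The internals of the alb module are not shown in the article. As a rough illustration of what such a module might create from an https_listeners entry, here is a sketch using plain AWS provider resources. All variable names, the target group name, and the chosen ssl_policy are assumptions. The HTTPS target group is what carries encryption from the load balancer to the web servers.

```hcl
# Hypothetical inputs; the real module's interface is not shown in the article.
variable "load_balancer_arn" { type = string }
variable "certificate_arn" { type = string }
variable "vpc_id" { type = string }

# HTTPS target group so traffic stays encrypted between the ALB and the instances.
resource "aws_lb_target_group" "web_https" {
  name     = "web-https" # assumed name
  port     = 443
  protocol = "HTTPS"
  vpc_id   = var.vpc_id
}

# HTTPS listener that presents the imported ACM certificate to end users.
resource "aws_lb_listener" "https" {
  load_balancer_arn = var.load_balancer_arn
  port              = 443
  protocol          = "HTTPS"
  ssl_policy        = "ELBSecurityPolicy-TLS13-1-2-2021-06" # assumed policy choice
  certificate_arn   = var.certificate_arn

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.web_https.arn
  }
}
```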

- Stage the certificates in S3 with common folder structure and filenames.

We used aws_s3_bucket_object resources to place the certificate, private key, certificate chain, and .pfx file into an S3 bucket, where any web server that needs them to terminate TLS can retrieve them. For our purposes we decided to organize the certificates by site URL. The file names remain static, but the S3 prefix/folder name is the site URL for the certificate:

# Certificate (PEM)
resource "aws_s3_bucket_object" "cert_pem" {
  bucket                 = var.s3_cert_bucket_name
  key                    = join("", ["/", var.s3_cert_foldername, "/cert.pem"])
  content                = acme_certificate.certificates[0].certificate_pem
  server_side_encryption = "aws:kms"
}

# Chain (PEM)
resource "aws_s3_bucket_object" "cert_chain" {
  bucket                 = var.s3_cert_bucket_name
  key                    = join("", ["/", var.s3_cert_foldername, "/cert.chain"])
  content                = acme_certificate.certificates[0].issuer_pem
  server_side_encryption = "aws:kms"
}

# Private key
resource "aws_s3_bucket_object" "cert_key" {
  bucket                 = var.s3_cert_bucket_name
  key                    = join("", ["/", var.s3_cert_foldername, "/cert.key"])
  content                = acme_certificate.certificates[0].private_key_pem
  server_side_encryption = "aws:kms"
}

# PFX (certificate_p12 is base64-encoded, so content_base64 writes the decoded binary file)
resource "aws_s3_bucket_object" "cert_pfx" {
  bucket                 = var.s3_cert_bucket_name
  key                    = join("", ["/", var.s3_cert_foldername, "/cert.pfx"])
  content_base64         = acme_certificate.certificates[0].certificate_p12
  server_side_encryption = "aws:kms"
}
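The instances also need permission to read these objects. The article does not show that part, so the following is a minimal sketch assuming the EC2 instances run under an IAM role whose name is passed in as a hypothetical instance_role_name variable. The kms:Decrypt statement and its cert_kms_key_arn variable are likewise assumptions and only matter when the bucket uses a customer managed KMS key.

```hcl
# Hypothetical inputs (not part of the original module).
variable "instance_role_name" { type = string }
variable "cert_kms_key_arn" { type = string }

data "aws_iam_policy_document" "read_certs" {
  # Download only the files under this environment's certificate folder.
  statement {
    sid       = "ReadCertificateFiles"
    actions   = ["s3:GetObject"]
    resources = ["arn:aws:s3:::${var.s3_cert_bucket_name}/${var.s3_cert_foldername}/*"]
  }

  # aws s3 cp --recursive needs to list the prefix before copying.
  statement {
    sid       = "ListCertificateFolder"
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::${var.s3_cert_bucket_name}"]

    condition {
      test     = "StringLike"
      variable = "s3:prefix"
      values   = ["${var.s3_cert_foldername}*"]
    }
  }

  # Only required for a customer managed KMS key (assumption).
  statement {
    sid       = "DecryptCertificateFiles"
    actions   = ["kms:Decrypt"]
    resources = [var.cert_kms_key_arn]
  }
}

resource "aws_iam_role_policy" "read_certs" {
  name   = "read-ssl-certificates" # assumed name
  role   = var.instance_role_name
  policy = data.aws_iam_policy_document.read_certs.json
}
```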

- Utilize Userdata/CICD pipeline to retrieve the certificates

This script will vary depending on your application and operating system, but our implementation was fairly simple: we used the aws s3 cp command to retrieve the files and copy them to the appropriate folder on the EC2 instance.

aws s3 cp --recursive s3://$(S3BucketName)/$(CertURL) c:\certs

- Import certificates into certificate store and IIS

Here, we used PowerShell commands to switch to the certificate store, import the .pfx file into the Windows Certificate Store, and look up the certificate's thumbprint. Then we set the HTTPS binding in IIS using the thumbprint variable:

### Import the certificate into the Windows Certificate Store
### (if certificate_p12_password was set in Terraform, also pass -Password with a SecureString)
Set-Location -Path cert:\localMachine\my
Import-PfxCertificate -FilePath c:\certs\cert.pfx

### Look up the thumbprint of the imported certificate
$Thumbprint = (Get-ChildItem -Path Cert:\LocalMachine\My | Where-Object {$_.Subject -match "$(CertURL)"}).Thumbprint;
Write-Host -Object "My thumbprint is: $Thumbprint";
Write-Host "##vso[task.setvariable variable=Thumbprint]$Thumbprint"

### Create HTTPS binding using the cert
New-IISSiteBinding -Name "TestSite" -BindingInformation "*:443:" -CertificateThumbPrint $Thumbprint -CertStoreLocation "cert:\localMachine\my" -Protocol https

Note: If a new certificate is generated, our AutoScaling Group will need to recycle the EC2 instances so that the UserData executes and installs the new certificate.
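The introduction mentions that Terraform rendered the userdata script for each environment, but the rendering itself is not shown. A minimal sketch of one way to do it with templatefile follows. The template file name, the template variable names, the AMI variable, and the instance type are all hypothetical. For Windows instances the template would wrap the commands above in powershell tags.

```hcl
# Hypothetical input (not part of the original module).
variable "windows_ami_id" { type = string }

resource "aws_launch_template" "web" {
  name_prefix   = "web-"
  image_id      = var.windows_ami_id
  instance_type = "t3.large" # assumed size

  # Render the per-environment script and base64-encode it, as launch templates require.
  # userdata.ps1.tftpl is an assumed template containing the aws s3 cp and
  # Import-PfxCertificate commands, with ${s3_bucket_name} and ${cert_folder} placeholders.
  user_data = base64encode(templatefile("${path.module}/userdata.ps1.tftpl", {
    s3_bucket_name = var.s3_cert_bucket_name
    cert_folder    = var.s3_cert_foldername
  }))
}
```

Only the `${...}` sequences are interpolated by templatefile, so PowerShell variables such as $Thumbprint pass through unchanged.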

- Certificate renewals

Finally, renewal is a matter of re-running the Terraform pipeline, which includes the terraform plan and apply commands. Once a certificate's remaining validity falls below the min_days_remaining threshold, the next apply renews it and overwrites the files in S3 and the certificate in ACM. Just be sure that your load balancers and EC2 instances pick up the renewed certificates, for example by having the Auto Scaling group recycle its EC2 instances.
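The recycling step can potentially be driven from the same apply. The sketch below is one possible approach rather than what the article's pipeline did: it tags the Auto Scaling group with the certificate's expiry date and lets a tag change trigger a rolling instance refresh. The subnet and launch template variables, the sizes, and the tag name are assumptions, and it relies on the acme_certificate resource exposing a certificate_not_after attribute.

```hcl
# Hypothetical inputs (not part of the original module).
variable "private_subnet_ids" { type = list(string) }
variable "launch_template_id" { type = string }

resource "aws_autoscaling_group" "web" {
  name_prefix         = "web-"
  min_size            = 2 # assumed sizes
  max_size            = 4
  vpc_zone_identifier = var.private_subnet_ids

  launch_template {
    id      = var.launch_template_id
    version = "$Latest"
  }

  # The tag value changes whenever the certificate is renewed.
  tag {
    key                 = "CertificateNotAfter"
    value               = acme_certificate.certificates[0].certificate_not_after
    propagate_at_launch = true
  }

  # Roll the instances when the tag changes so new ones fetch the renewed files.
  instance_refresh {
    strategy = "Rolling"
    preferences {
      min_healthy_percentage = 50
    }
    triggers = ["tag"]
  }

  # Make sure the renewed .pfx is already in S3 before instances are replaced.
  depends_on = [aws_s3_bucket_object.cert_pfx]
}
```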

Conclusion

This implementation combined a robust infrastructure-as-code framework with the added benefits of end-to-end encryption, web application customization, and automated certificate renewal.

FAQ

How can you ensure the security and confidentiality of the SSL certificates when storing them in S3 buckets, especially considering using AWS KMS for encryption?

Ensuring the security and confidentiality of SSL certificates stored in S3 involves strict access controls and robust encryption. AWS Key Management Service (KMS) is pivotal for encrypting the certificates at rest, safeguarding them from unauthorized access. It is critical to meticulously manage permissions, allowing only trusted roles and users access while ensuring that the certificates are encrypted in transit, maintaining their confidentiality and integrity.
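As a hedged illustration of those controls, the following sketch blocks public access to the certificate bucket and denies any request that does not use TLS. It assumes the bucket named by var.s3_cert_bucket_name has no existing bucket policy that this would replace. The resource names are illustrative.

```hcl
# Block every form of public access to the certificate bucket.
resource "aws_s3_bucket_public_access_block" "certs" {
  bucket                  = var.s3_cert_bucket_name
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# Deny any request that is not made over HTTPS.
data "aws_iam_policy_document" "tls_only" {
  statement {
    sid     = "DenyInsecureTransport"
    effect  = "Deny"
    actions = ["s3:*"]
    resources = [
      "arn:aws:s3:::${var.s3_cert_bucket_name}",
      "arn:aws:s3:::${var.s3_cert_bucket_name}/*",
    ]

    principals {
      type        = "*"
      identifiers = ["*"]
    }

    condition {
      test     = "Bool"
      variable = "aws:SecureTransport"
      values   = ["false"]
    }
  }
}

resource "aws_s3_bucket_policy" "tls_only" {
  bucket = var.s3_cert_bucket_name
  policy = data.aws_iam_policy_document.tls_only.json
}
```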

What are the best practices for managing the lifecycle of SSL certificates in a large-scale environment where multiple certificates are in use across various services?

A strategic approach is essential for managing SSL certificates' lifecycle in large-scale environments. Automating renewals, keeping a comprehensive inventory, and conducting regular validations are key. This proactive stance prevents service disruptions by ensuring certificates are consistently up-to-date and deployed correctly, thus maintaining secure communications across services.

Can the Terraform setup for SSL certificate generation and renewal be integrated with a monitoring system to alert administrators before the certificates expire or if there are issues during the renewal process?

Integrating Terraform with a monitoring system to oversee SSL certificate renewals can enhance operational reliability. Such integration enables proactive alerts for impending expirations and immediate notifications of any issues during renewal. This ensures administrators can respond swiftly, maintaining uninterrupted and secure service connections.
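One way this could look in practice, sketched under the assumption that the DaysToExpiry metric ACM publishes to CloudWatch is available for the imported certificate: an alarm that notifies an SNS topic when the certificate is within 14 days of expiry. The topic name, alarm name, and threshold are illustrative choices, not values from the article.

```hcl
# Hypothetical notification topic; subscribe your on-call channel to it.
resource "aws_sns_topic" "cert_alerts" {
  name = "ssl-certificate-alerts"
}

# Alarm when the imported certificate has 14 or fewer days left.
resource "aws_cloudwatch_metric_alarm" "cert_expiry" {
  alarm_name          = "acm-certificate-expiring"
  namespace           = "AWS/CertificateManager"
  metric_name         = "DaysToExpiry"
  statistic           = "Minimum"
  period              = 86400 # one day, in seconds
  evaluation_periods  = 1
  comparison_operator = "LessThanOrEqualToThreshold"
  threshold           = 14
  alarm_actions       = [aws_sns_topic.cert_alerts.arn]

  dimensions = {
    CertificateArn = aws_acm_certificate.cert.arn
  }
}
```

A threshold below min_days_remaining means the alarm only fires when a scheduled renewal run has been missed or has failed.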

Author Spotlight:

Michael Spitalieri
