How to Enable Spot Instances for Training on Amazon SageMaker

Amazon SageMaker Series - Article 4

We are authoring a series of articles to feature the end-to-end process of data preparation to model training, deployment, pipeline building, and monitoring using the Amazon SageMaker Pipeline. This fourth article of the Amazon SageMaker series focuses on how to Enable Spot Instances for Training on Amazon SageMaker.

Amazon SageMaker makes it easy to take advantage of the cost savings available through EC2 Spot Instances. By enabling Spot Instances for your training jobs on SageMaker you can reduce your training cost by up to 90% over on-demand instance pricing. This blog gives a comprehensive overview of Spot Instances and how to enable Spot Instances for training on Amazon SageMaker.

What are Spot Instances?

AWS provides different instance purchasing options to optimize costs based on needs, such as on-demand instances, Spot Instances, reserved instances, etc. AWS provides Spot Instances to sell excess EC2 capacity at a steep discount compared to on-demand pricing. Using Spot Instances for long-running training jobs can help lower your training costs by taking advantage of this discounted pricing.

AWS may reclaim Spot Instances at any time, so they are suitable only for workloads that can tolerate interruption. Your Spot Instance runs whenever capacity is available. However, AWS can “pull the plug” and terminate Spot Instances with a two-minute warning. These interruptions occur when AWS needs the capacity back for reserved instances or on-demand instances.

For Spot Instances, the hourly price for each instance type fluctuates based on current availability and demand in each region. You can find current Spot Instance prices on AWS’s Spot Instance pricing page and the Spot Instance advisor page that shows the typical saving percentage of each instance type. These resources will help you to determine the best instance type, when to run your training job, and calculate how much you will save compared to on-demand prices.

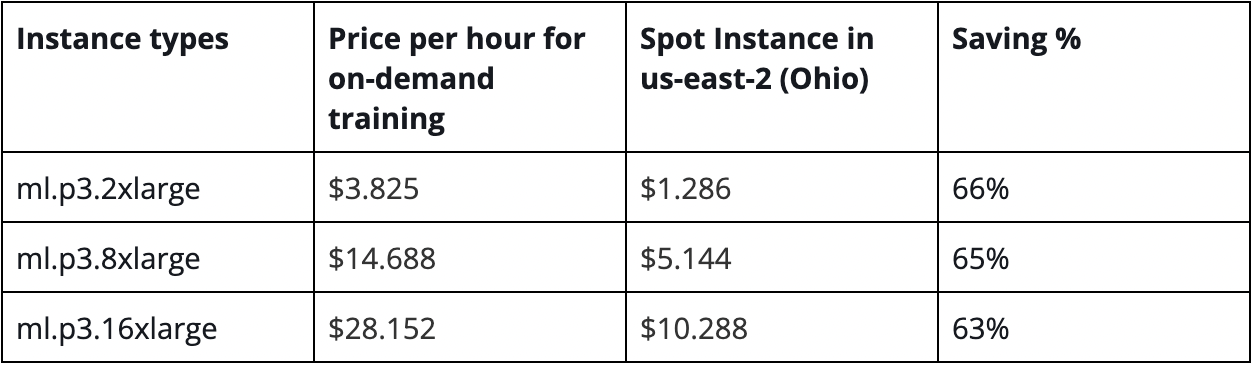

In particular, for SageMaker training, you can find the on-demand training instance pricing page here. The accompanying table shows an example pricing comparison of three instance types and the corresponding savings percentage.
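As a rough illustration of the arithmetic behind such a comparison, the sketch below computes the savings percentage and an estimated job cost from two hourly prices. The function names and the prices are hypothetical and chosen only for illustration; substitute the current figures from the pricing pages for your instance type and region.

```python
def spot_savings_percent(on_demand_hourly: float, spot_hourly: float) -> float:
    """Return the percentage saved by paying the Spot price instead of on-demand."""
    if on_demand_hourly <= 0:
        raise ValueError("on_demand_hourly must be positive")
    return (1 - spot_hourly / on_demand_hourly) * 100


def job_cost(hourly_price: float, hours: float) -> float:
    """Estimated cost of a job that runs for `hours` at `hourly_price`."""
    return hourly_price * hours


# Hypothetical prices in USD per hour, for illustration only.
on_demand_price = 4.00
spot_price = 1.20

print(f"Savings: {spot_savings_percent(on_demand_price, spot_price):.0f}%")
print(f"10-hour job on-demand: ${job_cost(on_demand_price, 10):.2f}")
print(f"10-hour job on Spot:   ${job_cost(spot_price, 10):.2f}")
```

Because Spot prices fluctuate, an estimate like this is only a snapshot; interruptions can also add run time that a simple hourly comparison does not capture.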

When to consider using Spot Instances for machine learning model training?

Spot Instances are ideal for stateless or fault-tolerant workloads due to the possibility of interruption. On average, Spot Instances are interrupted around 5% of the time or less. However, the likelihood of your instance being interrupted fluctuates based on the instance type, region, and availability zone.

Training for many machine learning models includes multiple epochs or batches between which your data and progress may be saved to a file after the calculation is done in memory. Additionally, many frameworks provide methods to checkpoint progress. By saving these checkpoints to S3, your SageMaker training jobs can become fault tolerant, making them a great candidate for use with Spot Instances.

SageMaker makes it easy to take advantage of this with built-in support for Spot Instances in training jobs. You can find details and additional resources in the AWS documentation for Managed Spot Training in Amazon SageMaker.

Many of the provided SageMaker Estimator classes already have checkpointing functionality built-in to take advantage of training with Spot Instances, including XGBoost, MXNet, TensorFlow, and PyTorch. AWS has provided a GitHub repository with sample notebooks that show how to use Spot Instances for training with SageMaker-provided algorithms.

How to enable Spot Instances for deep learning training in SageMaker?

This section will show an example of enabling Spot Instances when training a PyTorch model. We use the Managed Spot Training option in our training job definition. This definition allows us to specify which training jobs use Spot Instances and a stopping condition that specifies how long SageMaker waits for the job to run when using Spot Instances.

The code below shows the definition of a PyTorch estimator with the necessary parameters, along with a custom training script. checkpoint_s3_uri is used to specify the S3 URI where checkpoint files will be persisted during training. use_spot_instances, max_run, and max_wait need to be configured correctly to enable Spot Instances.

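    # Define the model estimator
    from sagemaker.pytorch import PyTorch

    # role, python_version, the train_* settings, s3_path, metric_definitions,
    # and pipeline_session are assumed to be defined earlier in the notebook.
    pytorch_estimator = PyTorch(
        entry_point="train.py",
        source_dir="code",
        role=role,
        framework_version="1.8.0",
        py_version=python_version,
        instance_count=train_instance_count,
        instance_type=train_instance_type,
        hyperparameters={
            "num_epochs": train_num_epochs,
            "learning_rate": train_learning_rate,
            "max_iter": train_max_iter,
            "s3_path": s3_path,
        },
        # S3 location where checkpoint files are persisted during training
        checkpoint_s3_uri=s3_path,
        # Settings that enable Managed Spot Training
        use_spot_instances=train_use_spot_instance,
        max_run=max_run,
        max_wait=max_wait,
        metric_definitions=metric_definitions,
        disable_profiler=True,
        sagemaker_session=pipeline_session,
    )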

To enable training with Spot Instances, we need to set values for max_wait and max_run, and max_wait must be at least as large as max_run. As described in the SageMaker Estimator documentation, max_run is the maximum duration of the SageMaker training job, in seconds (default: 24 * 60 * 60); after this amount of time, Amazon SageMaker terminates the job regardless of its current status. max_wait, on the other hand, is the maximum total time, in seconds, covering both the time spent waiting to get a Spot Instance and the time spent training (default: None); once this limit is exceeded, SageMaker stops waiting for the training job to complete. By default, the maximum value for max_run is 28 days, although a higher limit can be requested for your AWS account.

The recommended best practice is to set max_run larger than the estimated length of the training job with additional buffer time. In this example, since the training job takes about 10 mins to finish, max_run is set to be 30 mins (1800 seconds). See the example below.

    train_use_spot_instance = True

    if train_use_spot_instance:
        max_run = 1800   # 30 minutes, in seconds
        max_wait = 1805  # must be at least as large as max_run
    else:
        max_run = 86400  # (default value)
        max_wait = None  # (default value)
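The same settings can be derived from an estimate of the training time. The helper below is a minimal sketch, not part of the SageMaker SDK: the function name, the buffer multiplier, and the extra wait time are all hypothetical choices, and the only rule it enforces is that max_wait ends up at least as large as max_run.

```python
def spot_time_limits(
    use_spot: bool,
    expected_seconds: int,
    run_buffer: float = 3.0,         # hypothetical safety factor on the run time
    wait_buffer_seconds: int = 600,  # hypothetical allowance for waiting on capacity
) -> dict:
    """Build the time-limit keyword arguments for an Estimator.

    max_run is the expected training time scaled by run_buffer.
    max_wait is max_run plus wait_buffer_seconds, so it is never
    smaller than max_run. Without Spot Instances, max_wait is None.
    """
    if expected_seconds <= 0:
        raise ValueError("expected_seconds must be positive")
    if run_buffer < 1 or wait_buffer_seconds < 0:
        raise ValueError("buffers must not shrink the time limits")

    max_run = int(expected_seconds * run_buffer)
    max_wait = max_run + wait_buffer_seconds if use_spot else None
    return {
        "use_spot_instances": use_spot,
        "max_run": max_run,
        "max_wait": max_wait,
    }


# A job expected to take about 10 minutes (600 seconds).
limits = spot_time_limits(use_spot=True, expected_seconds=600)
print(limits)
```

The resulting dictionary could then be unpacked into the estimator definition, for example `PyTorch(..., **limits)`, in place of the three separate arguments.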

In addition to the parameter settings in the estimator, you also need to make sure you configure your training script to save checkpoints and resume from a checkpoint file. The PyTorch example from the GitHub repository mentioned above has a PyTorch training script that includes these necessary components for checkpointing. These components, in turn, enable fault-tolerant training with Spot Instances.

- A checkpoint path should be added as an input argument to the training script. This path is where the checkpoints will be saved locally in the training container. Any files in this directory will be saved to S3 if the training instance is interrupted and will automatically be loaded again when the training job resumes. This is done in the main function of the training script, line 199.

- The checkpoint path should be created if it does not exist so that we can save our checkpoint files here. In the provided script, this is done as part of the _train function, lines 50-54.

- Before beginning training, we check whether an existing model checkpoint should be loaded. Again, this is done in the _train function, lines 99-103.

- After each training epoch, we should save a model checkpoint. Again, the _train function, line 128, does this.
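The four steps above can be sketched in a framework-agnostic way. The example below is not the script from the GitHub repository: it uses only the Python standard library, stores a placeholder state as JSON, and the function names and file naming scheme are hypothetical. In a real PyTorch script, torch.save and torch.load would take the place of the JSON calls, and the checkpoint directory would typically be the local path that SageMaker syncs to checkpoint_s3_uri (/opt/ml/checkpoints by default, as far as I am aware).

```python
import json
import os


def latest_checkpoint(checkpoint_dir: str):
    """Return (epoch, path) for the newest checkpoint, or None if there is none."""
    if not os.path.isdir(checkpoint_dir):
        return None
    epochs = [
        int(name[len("epoch-"):-len(".json")])
        for name in os.listdir(checkpoint_dir)
        if name.startswith("epoch-") and name.endswith(".json")
    ]
    if not epochs:
        return None
    last = max(epochs)
    return last, os.path.join(checkpoint_dir, f"epoch-{last}.json")


def train(checkpoint_dir: str, num_epochs: int) -> dict:
    """Train for num_epochs in total, resuming from the newest checkpoint if present."""
    # Create the checkpoint path if it does not exist.
    os.makedirs(checkpoint_dir, exist_ok=True)

    # Load an existing checkpoint before training starts.
    state = {"epoch": 0, "weights": 0.0}
    found = latest_checkpoint(checkpoint_dir)
    if found is not None:
        with open(found[1]) as f:
            state = json.load(f)

    for epoch in range(state["epoch"] + 1, num_epochs + 1):
        # Placeholder for one real training epoch.
        state = {"epoch": epoch, "weights": state["weights"] + 1.0}

        # Save a checkpoint after each epoch. Writing to a temporary file
        # and renaming it avoids leaving a half-written checkpoint behind.
        path = os.path.join(checkpoint_dir, f"epoch-{epoch}.json")
        tmp_path = path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, path)
    return state
```

If the job is interrupted after epoch 3 of 5, calling train again with the same directory picks up at epoch 4 rather than starting over, which is the behavior that makes a training job a good fit for Spot Instances.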

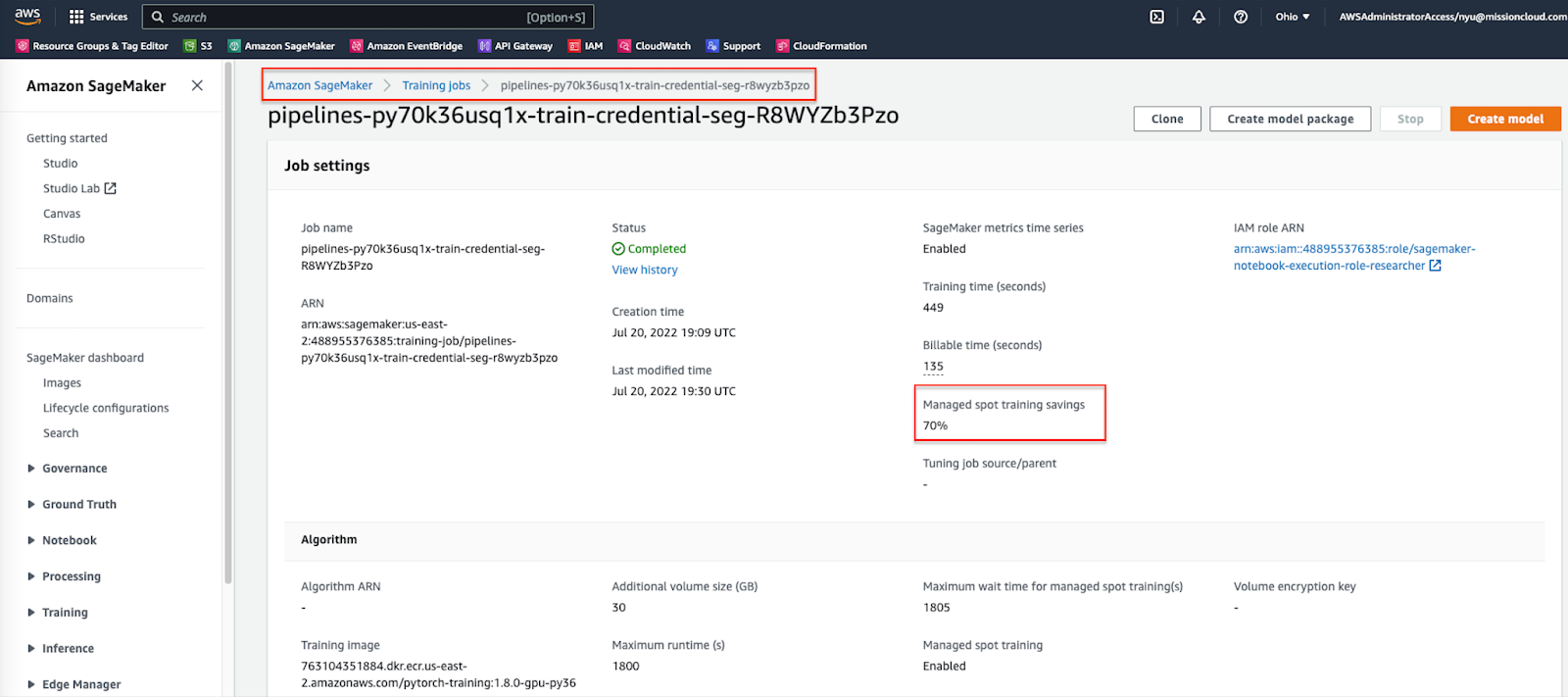

After configuring the settings correctly and running the training job, you can find the savings percentage for the training job in the SageMaker console, as the screenshot shows.
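The savings figure can also be reproduced from the job's timing data. The sketch below assumes the savings are derived from the training time and the billable time, which a training job description reports as TrainingTimeInSeconds and BillableTimeInSeconds; the function name and the sample values are hypothetical.

```python
def managed_spot_savings_percent(training_seconds: int, billable_seconds: int) -> float:
    """Percentage saved, based on total training time versus billed time."""
    if training_seconds <= 0:
        raise ValueError("training_seconds must be positive")
    return (1 - billable_seconds / training_seconds) * 100


# Hypothetical excerpt of a training job description, for illustration only.
job_description = {
    "TrainingTimeInSeconds": 600,
    "BillableTimeInSeconds": 180,
}

savings = managed_spot_savings_percent(
    job_description["TrainingTimeInSeconds"],
    job_description["BillableTimeInSeconds"],
)
print(f"Managed Spot Training savings: {savings:.1f}%")
```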

Conclusion

Spot Instances provide discounted pricing on unused EC2 capacity in exchange for the possibility of interruption. Amazon SageMaker allows you to take advantage of these cost savings for training jobs with minimal code changes. By using Amazon SageMaker Managed Spot Training, you can save up to 90% in training costs for your ML models.

Contributing authors:

Jo Zhang, Senior Partner Solutions Architect, AWS

Ryan Ries, Practice Lead, Mission Cloud Services

Kris Skrinak, Global Machine Learning Segment Technical, AWS

FAQ

- How can one handle Spot Instance interruptions more effectively to minimize the impact on ML model training?

To handle Spot Instance interruptions effectively, it's recommended to architect your training jobs to be interruptible. This includes frequently saving checkpoints to durable storage, so you can resume from the last checkpoint rather than starting over.

- What are the limitations of using Spot Instances for SageMaker training regarding data size or training duration?

The limitations of using Spot Instances for SageMaker training can vary based on availability and demand. Depending on the current spot market, there might be restrictions on how long instances can be used or how much data you can process.

- How does SageMaker's Managed Spot Training feature compare with traditional on-demand instance training in terms of overall time-to-train for a typical machine learning model?

Managed Spot Training in SageMaker typically offers cost savings over on-demand instances, with the trade-off being the potential for training interruptions. These interruptions can lengthen the overall time to train, though this varies based on instance availability and the job's ability to resume effectively.

Author Spotlight:

Na Yu
